The natural tendency when you hear about a scientific paper being retracted for alleged fraud is to assume the person making the allegations knows what they're doing and the perpetrator must be guilty. You wonder why a scientist would do such a thing, make sure you never cite their work, and move on.

That's what I used to think until it happened to a colleague of mine. This person works in a different department from mine in a different city, on a topic that's not particularly interesting to me, but she's a first-rate scientist: maybe not brilliant enough to make the intellectual leaps one needs to cure a disease in a single lightning stroke of genius, but highly talented and honest.

Then one day I learned that she had been accused of fraud. After investigating, I came to realize that all is not as it seems in the seamy world of these so-called internet sleuths.

Many of these ‘sleuths’ are not scientists at all, but hackers who go through the scientific literature looking for images—photographs of cells seen through a microscope or Western blots of proteins stained with antibodies—and run their software against them. If the software registers a hit, which is to say it finds a statistically significant similarity between two parts of an image, they have their quarry. Like the political activists who make a hobby of canceling their political opponents on Twitter, these hackers make a hobby of canceling scientists.

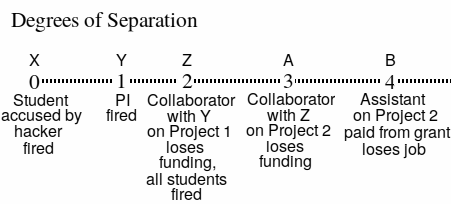

Because employment in academia depends on grants, an accusation, regardless of its validity, causes the loss of jobs at four or more degrees of separation from the original miscreant.

Maybe they see themselves as doing a useful service. But their real purpose is obscure. As in the case of my colleague, the effect on science can be devastating, and the sloppiness of the hackers suggests that their real goal is something less noble than they claim.

I was sickened when I saw the article in Nature advocating a “bounty” to fund these hackers and reward them for finding flaws. Certainly flaws need to be corrected, but innocent mistakes are not what's at issue. What is really needed is a system for correcting them without destroying the careers of those who make mistakes, as well as the career of anyone who cited their work before the flaw was discovered. That is what is happening now: careers are being destroyed merely for citing work that is later found to have flaws.

A typical grant contains 200 or 250 citations. If just one of those citations turns out to be flawed between the time you submit the grant and the time, a year later, when the funding is disbursed, university bureaucrats will display their true nature and cancel the grant without your consent. Through no fault of your own, you lose years of work (and often your job) and anyone hired from your grant loses their job as well. Even worse, a promising treatment for a deadly disease is abandoned: without funding, the work cannot be done.

Of course we also need more reliable software that is open-source, not proprietary, and not based on fantasies like AI, but validated and proven both theoretically and empirically to be accurate.

If an article with a flaw is published, it is a failure of peer review. Peer review is the responsibility of the journal. It is the journal editors who should be fired, not some innocent lab assistant who never saw the paper and never heard of the author.

How a university responds to an allegation of wrongdoing

Universities are run by bureaucrats. Although some bureaucrats at the very top may have somehow acquired an MD or PhD degree, they are generally not scientists, and they have little understanding of image analysis. Typically they put together a committee whose main goal is to protect the institution from bad PR by taking no chances.

Peer review is the responsibility of a scientific journal. If a flaw is found after publication, the journal editors should be fired.

In one published paper the authors reused an entire identical row of loading controls in their Western blots, smudges and all, three times in a single paper: resizing it in one image and squeezing it vertically while rotating it 180 degrees in the other. Maybe the twelve co-authors and the two or three peer reviewers didn't notice it. But it is the editors' job to make sure they do. It was an obvious attempt at deception that should have been caught in peer review.

Think about that: a scientist hires a bad student. Then not only is the scientist's career destroyed, but from then on the career of anyone who collaborates with the scientist, and anyone who collaborates with them, even on an unrelated project, is also destroyed. I know this from personal experience. I was aghast when I saw it happen. Those internet sleuths must be so proud.

The overall effect is to make it increasingly risky for scientists to do their job. A young person would be a damn fool to go into science in this environment.

False allegations

These ‘sleuths’ use a variety of software tools in their quest to destroy, but the tools all have two things in common. One, they are either non-transparent or poorly validated, which means the algorithms they use are either unknown to the user or inadequately tested, and two, they produce a ‘statistic.’

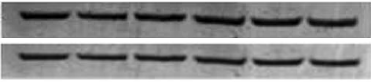

These two rows of fluorescent Western blots, taken from different figures in the scientific literature and said to be loading controls, appear identical. Is it fraud? Researchers often ‘strip’ Westerns and restain them with different antibodies, so it's very possible for a single loading control to apply to two or more blots. Unfortunately, the caption says they're different proteins from different kinds of mice. Is it a labeling error then? No! They really are different blots. The explanation seems to be that the images were taken by the same camera and there was dirt somewhere in the optical path: possibly on a transparent overlay, in a camera that re-photographed the image for some reason, or maybe even on the original camera sensor. An object lesson about keeping your camera lens clean.

Strange things can happen to Western blots. One of my blots once fell out of the envelope I was carrying it in and got run over by a Jeep. In retrospect, maybe I should have gotten the license number just in case.

To a layman, a statistic is a magic number that means that something is true. Computer programmers and scientists all know this is not so. I can analyze an image that I know for a fact is genuine (because I produced it in the lab) and it can come out highly significant using one technique and non-significant using another. A statistic is not valid unless it's produced by a precise technique that has been proven to be accurate both on theoretical and empirical grounds. Until then, it's just a number.
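To make the point concrete, here is a minimal sketch in Python using synthetic numbers, not data from any real blot. Two hypothetical intensity profiles share the same smooth background ramp but carry unrelated fine detail. The helper names `pearson` and `detrend` are illustrative, and neither score is the method of any particular forensic tool.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / sqrt(var_a * var_b)


def detrend(profile):
    """Subtract the least-squares straight line from an intensity profile."""
    n = len(profile)
    mean_x = (n - 1) / 2
    mean_y = sum(profile) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(profile))
    slope = sxy / sxx
    return [y - (mean_y + slope * (x - mean_x)) for x, y in enumerate(profile)]


# Synthetic data (assumption): a shared background ramp, e.g. uneven illumination.
background = [100 + 10 * i for i in range(16)]
# Unrelated fine detail in each profile: period-2 versus period-4 patterns.
detail_a = [3 if i % 2 == 0 else -3 for i in range(16)]
detail_b = [3 if i % 4 < 2 else -3 for i in range(16)]

profile_a = [bg + d for bg, d in zip(background, detail_a)]
profile_b = [bg + d for bg, d in zip(background, detail_b)]

# Technique 1: correlate the raw values (dominated by the shared background).
raw_r = pearson(profile_a, profile_b)
# Technique 2: remove the linear background first, then correlate the detail.
detrended_r = pearson(detrend(profile_a), detrend(profile_b))

print(f"raw correlation:       {raw_r:.3f}")
print(f"detrended correlation: {detrended_r:.3f}")
```

On this made-up data the raw correlation comes out above 0.99 while the detrended correlation is close to zero: the same pair of profiles looks nearly identical by one measure and unrelated by the other.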

An example is the statistic produced by the software used by the hacker who accused the discoverer of Aβ*56 of fraud, as detailed in that infamous Science article. I duplicated the hacker's algorithm from the description in Science magazine and found that, yes, it could produce a statistic, but it was also completely useless at discriminating between valid and invalid images, as I show here.

This is not to say that Aβ*56 is real. My opinion then as now is that it was a nonspecific band caused by a commercial antibody. Nonspecific bands happen when the antibody binds to something other than its target, and they show up frequently in Western blots. To show that they're a new form of your protein, you have to go through an elaborate and time-consuming process called immunoprecipitation followed by mass spectrometry. Almost no one does this because of the expense. Even if they do, there are a million ways it could still be an artifact.

The point is that Science magazine fell for the questionable image analysis and accused the researcher of sending the Alzheimer's disease field on a wild goose chase. This is nonsense: the Aβ theory was on thin ice, where wild geese fear to tread, in those days, and remains so today. It was neither helped nor harmed by this finding, but that is a story for another article.

What are the effects on science?

There are many. Most are not good.

Institutions with weak leadership will lose ground to those that defend their employees against false attacks, because faculty members at the weaker institutions will practice defensive science.

The trend toward bigger collaborations will be reversed. If even one name on your paper is ever accused of fraud, it can destroy your career and the careers of all your collaborators. As scientists move to protect themselves against this very real risk, we will see more PIs preferring to work alone, which means slower and less successful science.

Students seeking positions in the lab will be hardest hit. My policy has long been never to let a student do anything in the lab. If I'm forced to take one, they get a project that is inexpensive and unrelated to anything important, and their results are stored in the bottom of a locked file cabinet after they leave. That goes double for med students and quadruple for medical residents. As far as MDs are concerned, fuggetaboutit.

Scientists will be forced to spend their time testing their own images to make sure they don't trigger whatever weird software the scientific journal uses. We have learned from the plagiarism panic that we have to spell our department name a different way each time and to re-write the Methods sections of our papers in pointlessly different ways to avoid accusations that we are plagiarizing ourselves. The image falsification panic is no different.

Scientists choose the journal to which they send papers with great care. Those journals that gain a reputation for making false allegations against scientists, or that encourage others to do so, will become pariahs, shunned by scientists.

Recommendations

Testing a scientific image is serious business. Hackers might claim they're only ‘flagging a potentially suspicious image,’ but in fact they're making a deadly serious accusation that will be acted upon with little regard for its merit. Editors and bureaucrats are terrified of these allegations. The ultimate effect is to prevent us from curing diseases. Will the hackers be sorry when they get a disease that could have been cured but wasn't? Of course not; they'll blame science for that.

Before attempting to analyze an image, a person must be intimately familiar with the algorithms used and their strengths and weaknesses. The analyst must work with the original full-size 16-bit grayscale (or 48-bit color) image. If this is not available, no valid conclusions can be drawn. No one should ever attempt to analyze images in JPEG format or other low-quality images, such as those appearing in online PDF files, which are almost always converted to 8-bit/channel RGB or 8-bit grayscale: it often happens that such images appear to be manipulated but the signs of manipulation disappear when the original is found.

Technicians and researchers need to be educated: as an image passes through software on its way to publication, it is resized, rotated, and converted to different pixel depths. While it looks the same, its dynamic range may be reduced from 65,536 to fewer than 100 intensity levels per channel. That can produce artifacts that appear deliberate and make it impossible to refute allegations of tampering.
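A rough sketch of how much information the conversion discards, assuming the simplest possible 16-bit to 8-bit conversion (dropping the low byte) and a hypothetical faint band whose values occupy only part of the 16-bit range. Real publication pipelines vary, so the function names and the numbers here are illustrative only.

```python
def to_8bit(pixels_16bit):
    """Convert 16-bit intensities (0..65535) to 8-bit (0..255) by dropping the low byte."""
    return [value >> 8 for value in pixels_16bit]


def stretch_contrast(pixels, out_max=255):
    """Linearly rescale so the darkest pixel maps to 0 and the brightest to out_max."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return [0 for _ in pixels]
    return [(value - lo) * out_max // (hi - lo) for value in pixels]


def count_levels(pixels):
    """Number of distinct intensity levels actually present."""
    return len(set(pixels))


# Hypothetical faint band (assumption): 2,000 pixels with 16-bit values 5000..14995.
faint_band = [5000 + 5 * i for i in range(2000)]

eight_bit = to_8bit(faint_band)
stretched = stretch_contrast(eight_bit)

print("distinct levels in 16-bit original:", count_levels(faint_band))
print("distinct levels after 8-bit conversion:", count_levels(eight_bit))
print("distinct levels after contrast stretch:", count_levels(stretched))
print("range after contrast stretch:", min(stretched), "to", max(stretched))
```

Here 2,000 distinct gray levels collapse to 40, and stretching the contrast afterwards spreads those 40 levels across the full 0 to 255 range without restoring anything. The resulting gaps between levels are the kind of artifact described above.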

There are three different ways of analyzing an image: by shape, by pixel value, and by artifact detection. Shape analysis uses a transform such as wavelet decomposition or PCA to simplify the image. Pixel value analysis, the most powerful method, compares the relationship among the pixel values, e.g., shades of gray, in the image, so it ignores the overall shape and catches common manipulations such as flipping and contrast changing. Artifact detection looks for telltale signs left by a miscreant by using smoothness detection or by applying a colormap to the image to make signs of pasting visible.
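As one simple reading of pixel value comparison, the sketch below correlates the gray values of two small patches under each flip and the 180-degree rotation. Pearson correlation is unchanged by a linear brightness or contrast adjustment, so a flipped and contrast-adjusted copy still scores near 1. The function names and the tiny patches are hypothetical, and this is not the algorithm of any specific tool.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / sqrt(var_a * var_b)


def flatten(patch):
    """Turn a list of rows into one flat list of pixel values."""
    return [value for row in patch for value in row]


def orientations(patch):
    """Yield the patch as-is, mirrored, upside down, and rotated 180 degrees."""
    yield "as-is", patch
    yield "flip-horizontal", [row[::-1] for row in patch]
    yield "flip-vertical", patch[::-1]
    yield "rotate-180", [row[::-1] for row in patch[::-1]]


def best_match(reference, candidate):
    """Return (label, r) for the orientation of candidate that best matches reference."""
    flat_ref = flatten(reference)
    scored = ((label, pearson(flat_ref, flatten(p))) for label, p in orientations(candidate))
    return max(scored, key=lambda item: item[1])


# Hypothetical 3x4 grayscale patch.
original = [
    [10, 20, 30, 40],
    [15, 60, 90, 35],
    [12, 25, 33, 48],
]
# Simulated manipulation: rotate 180 degrees, then apply a linear contrast change.
suspect = [[2 * value + 7 for value in row[::-1]] for row in original[::-1]]

label, r = best_match(original, suspect)
print(label, round(r, 6))
```

A high score from a comparison like this is only a reason to look at the original files more closely. It says nothing about how the similarity arose.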

An analysis is not complete and is not valid unless all three techniques are used. We should remember that it is entirely possible for a computer to generate a fake image from thin air that passes every test. It is also possible for a bona fide, unretouched image to fail every test. At best, the analyst can look for tracks left by an inexperienced miscreant.

Only rigorously validated open-source image forensic software run by a person trained and experienced in image analysis is adequate for obtaining credible determinations about image manipulation. Any allegations made with proprietary software using unknown methods—or methods claiming to use overhyped techniques such as AI—should be dismissed for what they are: garbage.

Conclusion

All this points to a new risk in science: what happens if you cite somebody in a paper or grant and then a year or two later it turns out they faked the result? The answer, increasingly, is that you too are burned at the stake. And your patients? Sucks to be them.

aug 28 2022, 7:04 am. updated aug 29 2022, 4:26 am and aug 30 2022, 6:56 pm