Many younger doctors practice probabilistic medicine. While the current fashion is to rely on evidence-based medicine, in preventive medicine doctors must rely on probability rather than evidence. Women are more likely to get migraines, breast cancer, and anxiety disorders, so they're more likely to be tested for them. Just as it's unlikely that a male would ever be screened for breast cancer, it's unlikely that a person under age fifty would be referred for a colonoscopy.

This makes a certain amount of sense: testing is an expensive resource and shouldn't be wasted. But when there's a panicked rush to screen the entire population, we can run into trouble.

Clinical residents in particular, either from lack of experience or perhaps from too much emphasis on evidence-based medicine during training, tend to overestimate the value of lab tests. A patient who comes in for a routine test and encounters a resident will have their blood tested for dozens of potential conditions. If the test results “show positive,” the physician often badgers the patient with letters and phone calls saying that they have a fatal disease and must seek treatment immediately.

This may be done with the best of intentions, but hospitals are blind to the fact that treatment can be as bad as the disease: a patient inappropriately getting radiation or chemotherapy will suffer terrible side effects from the treatment, including cognitive impairment and diminution of quality of life. Those undergoing surgery may lose their jobs when their employer decides their insurance costs outweigh their value to the company. And complications from surgery that occur days or weeks later can cause deaths that are unrecognized by the hospital: all they know is that the patient stopped making follow-up visits.

I've experienced this personally: One doctor insisted that I had myasthenia gravis, a rare autoimmune disorder that causes progressive muscle weakness, and that I needed my thymus gland removed right away. Meanwhile, my request for something to treat my migraines went ignored because, the doctor assured me, it was mostly women who get migraines. That was twenty years ago and my thymus is still fine, and the migraines that I inherited still plague me.

An acquaintance of mine had digestive problems, so the doctors decided for reasons that are unclear to me that they needed to remove her stomach. They did so, but her digestive problems continued, so they started removing more and more of that poor lady's digestive system. Another friend of mine died of pneumonia after going in for knee surgery. Then there was the time somebody misdiagnosed a mosquito bite as cancer. No doubt most people can think of similar incidents.

These medical errors point to the importance of avoiding probability-based medicine by doing clinical lab tests, if such tests are available, before making a diagnosis. Patients with myasthenia gravis, for example, have antibodies in their blood against various proteins, such as titin, ryanodine receptor, or the acetylcholine receptor. In science we always try to obtain two independent lines of evidence for our finding. That's a good model to follow: young doctors need to be taught that clinical lab tests that conflict with the patient's symptoms need to be viewed with great skepticism.

Accuracy and specificity

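A clinical test is evaluated by its accuracy (here meaning the fraction of patients with the disease that it detects, usually called sensitivity) and by its specificity (the fraction of patients without the disease that it correctly clears). A test that is 90% accurate and 90% specific is generally considered good: only ten percent of patients with the disease will be missed, and only ten percent of the patients who have something else will be misdiagnosed.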
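To make the two error rates concrete, here is a minimal sketch of the arithmetic. The function name and the cohort of 100 sick and 900 healthy patients are hypothetical, chosen only for illustration; "accuracy" is used as in the text, meaning the fraction of sick patients the test detects.

```python
def screening_errors(n_sick, n_healthy, accuracy, specificity):
    """Return (missed, misdiagnosed) patient counts for one round of testing.

    accuracy    -- fraction of sick patients the test detects (0 to 1)
    specificity -- fraction of healthy patients the test correctly clears (0 to 1)
    """
    missed = n_sick * (1 - accuracy)              # sick patients the test fails to flag
    misdiagnosed = n_healthy * (1 - specificity)  # healthy patients flagged as sick
    return missed, misdiagnosed


# Hypothetical cohort: 100 sick and 900 healthy patients, 90%/90% test.
missed, misdiagnosed = screening_errors(100, 900, accuracy=0.90, specificity=0.90)
print(f"missed: {round(missed)}, misdiagnosed: {round(misdiagnosed)}")
```

In this made-up cohort the test misses 10 of the 100 sick patients but flags 90 of the 900 healthy ones, so the same ten percent error rate already produces far more misdiagnoses than misses once healthy patients outnumber sick ones.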

False positives caused by mass screening

Low specificity is a major problem when the disease is diagnosed using biomarkers. A test for some neurological disease that only measures tau, a protein that indicates loss of neurons, will detect a variety of disorders. Such tests are routinely proposed in the scientific literature, and they are rightly viewed with skepticism. Even worse are tests based on biomarkers selected probabilistically by neural networks or transcriptomic screening. I have seen people go so far as to obtain CLIA certification (a difficult and expensive federal requirement for a diagnostic lab in the United States) for such tests, never considering that a test without a solid mechanistic basis in pathogenesis is almost certainly a statistical fluke.

Equally dangerous is when a doctor makes a conclusion based on probability, or when mass screening is done. In these cases, even when the test makes biological sense, the deck is stacked heavily against the patient.

This is especially true when you try to screen the whole population. You may remember all those big mammogram screening vans that used to drive around giving women free mammograms. Those vans are now gone because we now know they were generating huge numbers of false positives. Women are quite reasonably terrified of breast cancer, and (as was the case with actor Angelina Jolie) may demand treatment or even surgery on the basis of a genetic test or some other test result just to be safe.

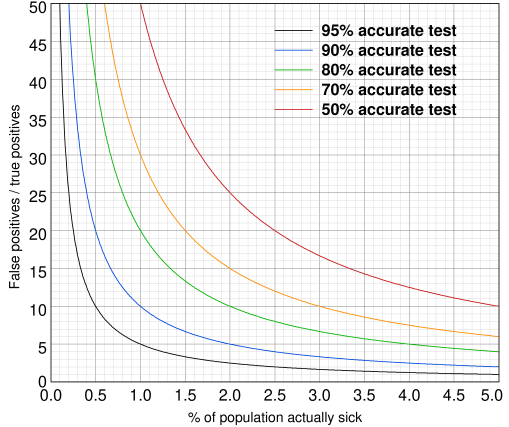

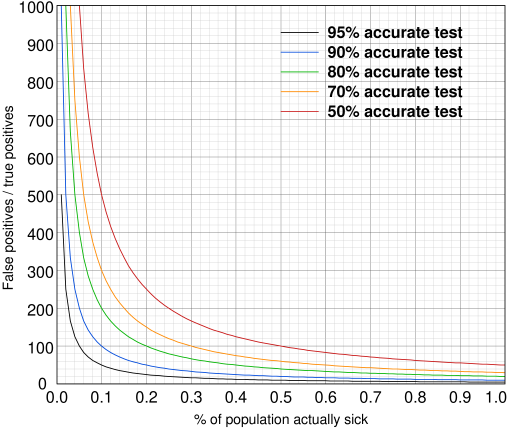

A simple calculation puts numbers to these ideas. As the graph shows, even a 95% accurate test, which is considered excellent, produces vast numbers of false positives when applied to the wider population. If everyone who is tested has the disease, the accuracy approaches the theoretical value of 95%. If 5% of the tested population is really sick, the ratio of false positives to true cases is already 1:1, meaning a positive result is no better than flipping a coin. If only 1% of the population tested is actually sick, there will be 5 times as many false positives as true positives. If 0.1% are really sick, false positives outnumber true positives by 50 to 1. In the worst-case scenario, if only 0.01% are really sick, the ratio is 500 to 1 for a 95% accurate test and 3000 to 1 for a 70% accurate test.

You can calculate this yourself from the formula

false/true = (1 − a) × n / ntrue

where a = accuracy of the test between 0 and 1, n = number of people screened, and ntrue = the number of people who are actually sick.

False positives caused by mass screening: enlarged version

As ntrue approaches zero, the curve shoots toward infinity. You can verify this in your head: if no one is actually sick, then the test would still show that 5% are sick and the ratio of false positives to true positives would be infinity.
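The formula above can be sketched in a few lines of Python. The function name is hypothetical, and the prevalence argument is simply ntrue / n, so (1 − a) × n / ntrue becomes (1 − a) / prevalence.

```python
def false_to_true_ratio(accuracy, prevalence):
    """Approximate false positives per true positive, using the formula
    false/true = (1 - a) * n / ntrue, with prevalence = ntrue / n."""
    if prevalence <= 0:
        # Nobody is sick: every positive result is false.
        return float("inf")
    return (1 - accuracy) / prevalence


# Fractions of the tested population that are actually sick.
for prevalence in (0.05, 0.01, 0.001, 0.0001):
    for accuracy in (0.95, 0.70):
        ratio = false_to_true_ratio(accuracy, prevalence)
        print(f"accuracy {accuracy:.0%}, sick {prevalence:.2%}: "
              f"about {ratio:,.0f} false positives per true positive")
```

Like the formula itself, this is a rough approximation: it counts (1 − a) × n false positives and treats every sick person as a true positive, which is reasonable when only a small fraction of those screened are sick.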

You might argue that this is not really a problem, as the test can be repeated or “confirmed” by some other test. Unfortunately, this is all too often a myth. Repeating the test using the same method only trades one error for another. If either positive result is treated as a positive, the chance of a false positive rises. If both results must be positive, the chance of a false negative rises instead: assuming the two runs err independently, in our 95% example false negatives rise from 5% to about 10% (1 − 0.95²), and for a 70% accurate test they rise from 30% to 51% (1 − 0.7²), becoming equivalent to flipping a coin. Only when a fundamentally different test is used to confirm, assuming a good one exists, can we cut down on the risk. Even then, in this age of defensive medicine, a doctor takes an enormous professional risk diagnosing against a positive test result.
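The effect of repeating a test on false negatives can be checked with a short sketch. It rests on two assumptions that are not spelled out in the text: the runs err independently of each other, and a patient counts as positive only if every run comes back positive. The function name is hypothetical.

```python
def miss_rate_after_repeats(accuracy, repeats=2):
    """Chance that a sick patient gets at least one negative result in
    `repeats` independent runs, i.e. is missed when all runs must be positive."""
    return 1 - accuracy ** repeats


for accuracy in (0.95, 0.70):
    single = 1 - accuracy
    double = miss_rate_after_repeats(accuracy, repeats=2)
    print(f"accuracy {accuracy:.0%}: false negatives {single:.1%} -> {double:.1%}")
```

Under these assumptions a 95% accurate test misses 9.75% of sick patients after two runs, and a 70% accurate test misses 51%; the remaining 49% is the chance that both runs catch the disease. If the same method tends to repeat the same mistake, the runs are not independent and these figures no longer apply.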

It doesn't help when the only way to confirm the diagnosis is to proceed with surgery, or when public health authorities decide that the test is “the gold standard.” In this case, doctors are under even more pressure to report a positive test as a “case.” This inaccurately inflates the number of cases, taking resources away from other health issues. That is the real danger of aggressive preventive medicine.

Most people, hearing this during our Covid pandemic, will think of the Covid tests, whose accuracy is in dispute. But the issue goes way beyond Covid. The problem of clinical tests producing false positives is well recognized in the medical literature. Doctors just need to pay more attention to it. And it's another reason why paywalls blocking public access to vital health information need to be abolished.

mar 21 2021, 8:32 am