I am an optimist. I wait until the last minute to pay my taxes on the theory that a nuclear war could break out at any moment and the US government might cease to exist. So far that hasn't happened, so I paid 40 bucks a year for software just for the chance of estimating the correct amount. Then I started using two software packages, and they always gave different answers.

Those software programs “know” all the latest tax laws, but they are no more intelligent than the text editor I'm typing on at the moment. Or for that matter, the “AI” the press is gushing about.

But for some reason, when the press fawns over yet another fake instantiation of computer learning, this time with the preposterous claim that their “AI” spontaneously developed a “theory of mind,” some people believe it and think it's the beginning of metal guys with sunglasses and mini-Excel spreadsheets popping up on their visual cortex.

What is theory of mind?

Theory of mind is nothing magical. If AI is ever developed, it will look a lot like the diagrams in those old general AI books from the sixties. Each object will be represented by a node that has access to the properties of the object it stands for, so that the AI can deduce how that object will act. The node might be a real processor or something abstract that represents one. It's not important which.

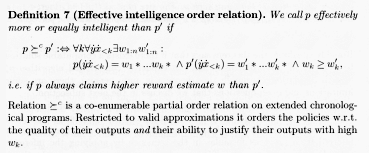

Effective intelligence order relation, a formula for comparing two programs to tell us which one is smarter (Hutter M in Artificial General Intelligence, p. 277)

Humans do this all the time. They have a model in their heads that represents a generic person. From that they create a new model that represents a particular individual, maybe by cloning the generic one or maybe hierarchically, as I and many others proposed ages ago. Academics called this a grandmother cell, but the ones who proposed it imagined there had to be exactly one such cell in your brain, so that if it died you would be unable to remember your grandmother. That concept was easily shot down, and instead of learning some neurophysiology they just abandoned the whole idea.
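For the programmers, here is a toy sketch of the difference between the two ways of deriving an individual from the generic person: a hierarchy, where the individual stores only what is different and looks everything else up in its parent, versus a clone, which copies the generic model outright. It's a minimal illustration under my own assumptions, not anyone's actual design: `PersonModel`, `clone_from`, and the trait names are all hypothetical, and "prediction" here is nothing more than looking up a stored reaction.

```python
import copy
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PersonModel:
    """A node standing for one person: its own traits plus an optional, more generic parent."""
    name: str
    traits: dict = field(default_factory=dict)
    parent: Optional["PersonModel"] = None

    def get(self, trait):
        # Hierarchical lookup: use this node's own value if it has one,
        # otherwise fall back to the more generic model it refines.
        node = self
        while node is not None:
            if trait in node.traits:
                return node.traits[trait]
            node = node.parent
        return None

    def update(self, trait, value):
        # Revising the model after an interaction touches only this node.
        self.traits[trait] = value

    def predict(self, situation):
        # Hypothetical rule: the predicted behavior is just the stored reaction.
        reaction = self.get(f"reaction_to_{situation}")
        return reaction if reaction is not None else "unknown"


def clone_from(prototype, name):
    # Cloning alternative: copy the generic model's traits outright.
    # After this the copy no longer follows changes made to the prototype.
    individual = copy.deepcopy(prototype)
    individual.name = name
    return individual


generic = PersonModel("generic person",
                      {"reaction_to_greeting": "says hello", "likes_coffee": True})
# Hierarchical individual: stores only what differs from the generic person.
grandma = PersonModel("grandmother", {"likes_coffee": False}, parent=generic)

print(grandma.get("likes_coffee"))   # False
print(grandma.predict("greeting"))   # says hello
```

The design difference shows up when the generic model gets revised: the hierarchical grandmother picks up the change automatically, while a clone keeps whatever it was copied with.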

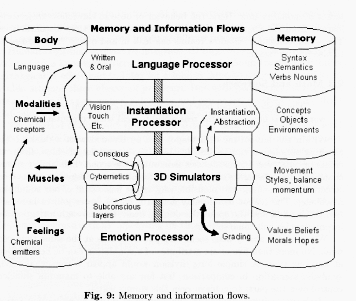

Here are two examples from the 2007 book Artificial General Intelligence, edited by Ben Goertzel and Cassio Pennachin. There were two styles. One camp kept trying to make everything mathematical and often crowed about finding a “closed-form solution,” not realizing that this meant they had failed. The other camp was computer science guys who drew lots of boxes and arrows.

Lots of boxes: Memory and Information flow diagram (Hoyes KA in Artificial General Intelligence, p. 379)

These models in your head are what you interact with. They are what you hate and what you fall in love with. They are real to you because they are what you are actually dealing with, and what you revise and update whenever you interact with that person.

Mental telepathy

The models' task is to predict the person's speech and behavior. They can seem so real that humans sometimes think they're getting information telepathically from the other person. In fact, it's the model in their own head predicting what the other person will think and what advice they'd give, sometimes accurately and sometimes not.

Quite often the model is wildly inaccurate, which is why we hear about fanatical fans who sincerely believe they are communicating telepathically with their screen idol and that he or she really likes them, when in fact the idol would probably call them a maggot, and the only advice he or she would give the fan on meeting them is to eat shit and die.

Of course, the biggest and most complicated model a human has is the one of himself, which is why humans believe themselves to be conscious beings. Everything a human does is done to build up and reinforce that model, and the human will defend the model to the bitter end.

The unanswered question is how to do all that great stuff in software. On the one hand, the humans working on this are not academics, so they won't get bogged down in the endless claim inflation driven by our idiotic system of funding research. On the other hand, they're at places like Google, so whatever they do end up with, the only thing we can be sure of is that it will be evil.

But look at the bright side. If AI is invented, what will it replace? The US government. That was the strategy we used in that computer game, Descent II I think it was: trick the two giant evil monsters into fighting each other, and it always worked. They would blast rockets and giant balls of fire at each other and eventually knock each other out. If only we could get that to happen by next Tuesday, when my tax bill is due, that would be great.

apr 15 2023, 11:08 am