Artificial intelligence, or AI, is one of those fields that goes in and out of fashion, like waves crashing on a beach and falling back. Each new wave needs its own raison d'être: something to differentiate itself from the previous ones to generate excitement. And by excitement, I mean, of course, funding.

The first wave was a competition between two groups: the predicate calculus guys, who tried to devise a logic of intelligence, and the Perceptron guys, who tried to simulate the self-organizing systems of the brain. It died (or was murdered, depending on your point of view) when Marvin Minsky of MIT proved that Perceptrons couldn't determine whether the number of pixels in a pattern was odd or even.* Never mind that humans can't do this either. The field crashed and became disreputable. Even Minsky seemed surprised.

Second wave

The second wave was the golden era of connectionist models, and it focused on pattern recognition. In those days, time on a PDP-11 cost a hundred bucks an hour—my department was not so pleased when I ran up a huge bill in just a few weeks. The idea was to make something as much like a real brain as possible. Each node represented a neuron and the connections were thought of as synapses. Some of these, known as multilayer networks, like Terrence Sejnowski's hidden layer model, are still being used today.

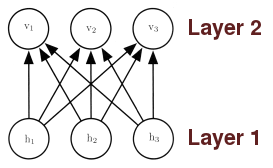

Fig. 1. A simple two-layer neural network. Each circle represents a neuron.

‘Deep learning’ is very similar to that. The word ‘deep’ is intended to make it sound complicated, but it's actually very simple. A pattern (a picture of a dog, for example) is imposed on the first layer, which sends its outputs to the next higher layer. Each node performs some simple calculations, such as adding up the pixels, weighted by the strength of each connection (represented by an arrow), and fires if the result is above some threshold. During training, the weights are nudged up or down depending on whether the output was right. In this way, the network can store a variety of patterns.

As you can see in Fig. 1, higher layers can identify higher-order features. For example, neuron v1 might recognize ‘dog’, while v2 recognizes ‘cat’. If we add more layers, it can, in principle, do some very sophisticated things.
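For readers who prefer code to arrows, here is a minimal sketch of the weighted-sum-and-threshold step described above. It covers only the forward pass; the weight adjustments are left out. The function names, the weights, the threshold of 0.5, and the four-pixel "image" are all made up for illustration and are not taken from any real model.

```python
def unit_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def layer_output(inputs, weight_rows, threshold):
    """Apply one threshold unit per row of weights to the same inputs."""
    return [unit_output(inputs, row, threshold) for row in weight_rows]


# Hypothetical four-pixel input pattern (1 = pixel on, 0 = pixel off).
pixels = [1, 0, 1, 1]

# Hypothetical connection strengths: three hidden units, then two output units.
hidden_weights = [
    [0.5, -0.2, 0.3, 0.1],
    [-0.4, 0.9, -0.1, 0.2],
    [0.2, 0.2, 0.2, 0.2],
]
output_weights = [
    [1.0, -1.0, 0.5],   # stands in for v1 ("dog")
    [-1.0, 1.0, 0.5],   # stands in for v2 ("cat")
]

hidden = layer_output(pixels, hidden_weights, threshold=0.5)
outputs = layer_output(hidden, output_weights, threshold=0.5)
print(hidden, outputs)
```

With these invented numbers the first output unit fires and the second does not. A real network would have thousands of units and weights learned from data rather than typed in by hand.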

Unfortunately, the second wave also crashed. The social dynamic of the field rewarded people on the basis of how many patterns their network could handle. So academics started making more and more extravagant claims: I knew one guy who claimed that his network could store 2^N patterns with only N neurons. This would have meant, for example, that a network with only 100 neurons could have stored about 1.27×10^30 different patterns. He was unfazed when told that this was a theoretical impossibility. Over time, more people abandoned the field altogether. It was a textbook case of the bad driving out the good.
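The arithmetic is easy to check. The sketch below assumes each neuron is binary (on or off), which is my simplification and not something the claimant necessarily specified. Under that assumption, 2^N is also the total number of distinct states the whole network can be in, so "storing" 2^N patterns would mean every possible state counts as a stored pattern and the network distinguishes nothing.

```python
n_neurons = 100

# Number of patterns the network was claimed to store.
claimed_patterns = 2 ** n_neurons

# Total number of on/off configurations of n_neurons binary units.
possible_states = 2 ** n_neurons

print(claimed_patterns)
print(f"{claimed_patterns:.2e}")
print(claimed_patterns == possible_states)
```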

Hopfield networks

But it was a one-two punch that really killed it off. In 1982 John Hopfield described a fully connected network, where every neuron was connected to every other neuron. That gave it the unheard-of feature of being mathematically analyzable. And lo, the sky was darkened as the mathematicians swooped in, calculating its theoretical capacity and every other conceivable parameter, with ever more complicated math. I had to spend hours in the MBL library reading physics articles on Ising spin glass and stuff like that, in a desperate hope to understand it.

Nobody seemed to notice that there was a problem: maybe it could remember a pattern—theoretically it could store one pattern for every seven neurons—but there was no way to get a usable output from it, and it was unstable. But at least the Hopfield guys were being honest.
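To make the idea concrete, here is a toy sketch of a Hopfield-style network in plain Python. It assumes the usual textbook formulation (units valued +1 or -1, Hebbian outer-product weights, one-unit-at-a-time updates), which may differ in detail from the 1982 paper. The function names and the two 16-unit patterns are invented for illustration. Two patterns in 16 units is about the one-per-seven figure mentioned above, and the patterns were deliberately chosen not to interfere with each other, so this is a best case and says nothing about the output and stability problems.

```python
def train(patterns):
    """Build a symmetric weight matrix with the Hebbian outer-product rule."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j]
    return weights


def recall(weights, state, max_sweeps=10):
    """Update units one at a time until a full sweep changes nothing."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two hypothetical 16-unit patterns, chosen to be orthogonal to each other.
pattern_a = [1] * 8 + [-1] * 8
pattern_b = [1, -1] * 8

weights = train([pattern_a, pattern_b])

# Corrupt pattern_a by flipping two units, then let the network settle.
noisy = list(pattern_a)
noisy[0] = -noisy[0]
noisy[5] = -noisy[5]
recalled = recall(weights, noisy)
print(recalled == pattern_a)
```

In this hand-picked case the corrupted input settles back onto the stored pattern. With random patterns, or more of them, recall degrades quickly.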

Third wave

We're in the third wave now, and once again the field is split. Among biologists, the buzzword is ‘evidence-driven’: no more inventing models out of thin air, mister. You have to measure the signals in real live neurons using microelectrodes, and then build a model that explains what you saw. That means being expert in two very difficult fields at the same time. It also means that very few people, least of all the all-important faculty tenure committees, understand your work. The biologists walk out of your seminars saying things like “I didn't understand one thing that guy said!” and the mathematicians say things like “How the hell did he get from covariance matrix to that other weird thing?”

The other branch is pure modelers. It's mostly corporations, not academics, building models these days. They call it “deep learning,” where the word ‘deep’ refers not to any particular sophistication or profundity of thought, but to the number of layers, or depth, of the network.

For some reason whenever someone says ‘deep learning’, I hear ‘Deepak Chopra’. But maybe that's just me.

Deep Learning by Goodfellow, Bengio, and Courville (MIT Press, 2016) is the most popular introductory text for this branch.

In the new paradigm, there's no attempt to make it biologically realistic. Goodfellow et al. describe the algorithms with pseudocode and matrix equations, and the ‘neurons’ are free to perform cosines, logarithms, or whatever else they want. Even old parameter estimation algorithms, like principal components analysis (PCA), are still relevant. It's pure industry-think: get something that does the job, tweak it until it's stable, then $$$.

Google is interested, probably because they need a way of searching images by content. If a user wants a picture of a pit bull biting a 1961 Chevy convertible, Google would no longer have to rely on metadata; the information is in the image already, and all that's needed is a form of content-addressable memory to get it out.

I'll be back, maybe

So deep learning is just multilayer neural networks with an FPU thrown in. But how close are they to making it intelligent?

Even if deep learnin' succeeds beyond a CPU's wildest dreams, it won't be real AI for the simple reason that pattern recognition is not the same as intelligence. Some other component is needed. What that may be is not obvious to the third-wavers at the moment, but without it, AI will exist only in sales brochures and in books written by the doomsayers.

We've all heard those doomsayers. They're the ones running around shrieking about how mankind is about to be wiped out by big metal guys with glowing red eyes, Austrian accents, and mini-Excel spreadsheets projected onto their visual cortex. But it's mostly fearboasting: our AI is so good it will wipe us out. Nick Bostrom even suggested that it should be banned altogether, and that the ban should perhaps be enforced with missile strikes if necessary.

He didn't mean it, of course, and neither does Elon Musk. What they're really doing is angling for government to pass laws to increase the barriers to entry. That way they'll have a near-monopoly if they ever get the damn thing to work.

At the moment they're miles away from that. One challenge is the utter lack of interest from academics. If some tweedy college professor stumbled on the principle that makes the jump from mindless lump of silicon to conscious being, that person would demand a billion dollars for the idea.** And Google would adopt the attitude of Not Invented Here, Baby.

Even though computers won't achieve sentience any time soon, the technology will be useful for computer manufacturers, bringing them closer to the goal of content-addressable memory, which will increase performance enormously. It will require a new CPU architecture, but it just might be the equivalent of performing CPR on Moore's law.

The eclectic approach described by Goodfellow et al., where every algorithm in Knuth and every formula in the signal processing textbooks is thrown in, makes sense. After all, nobody knew what functions the heart carried out until after the pump was invented. But it will be a race: the field is already showing signs of incipient collapse. In a few years the expression ‘Deep Learning’ could come to mean ‘overhyped software that gave us those boring sexbots.’

If that happens they'll have to think of an even sexier term for the fourth wave. I suggest ‘Super-Duper Brilliant Computing Initiative.’

* There was also a kerfuffle about XOR.

** It's actually not difficult to make an AI conscious in the same way a human is conscious, though I'm skeptical it can be done with their current network architecture.

Created May 03 2017; last edited Jul 13 2017, 2:07 am