It sounds like science fiction: In a world where computers tell everyone what to think, there is one man who uses his skill at programming multiple-inheritance class hierarchies and type-safe pointer conversion in C++ to fight back against tyranny!


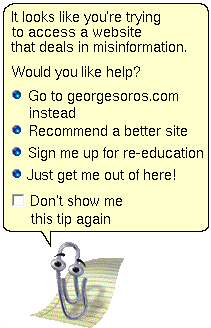

We always knew Clippy was a communist.

Yeah, well, guess what?

Suppose I put a routine in my software to detect who's using it, and if I disapproved of what the person was doing, or if I just disliked them, the software would delete some of their files. It would be called malware, and rightfully so.

Or suppose I manufactured a TV that would refuse to let you watch any programs that presented opinions that I disagreed with.

This is basically what Mozilla, the creator of Firefox, is planning with the Mozilla Information Trust Initiative. It turns out that censoring our Internet searches and banning Twitter, Facebook, and YouTube users based on their political views is not enough. They want to bake their politics into their software.

Don't kid yourself. This isn't really about fake news. Big Internet corporations want to influence your opinions, purchasing habits, and voting preferences by controlling what you see.

Their sales blurb makes it sound fun and exciting:

> Mozilla's Open Innovation team will work with like-minded technologists and artists to develop technology that combats misinformation. Mozilla will partner with global media organizations to do this, and also double down on our existing product work in the space, like Pocket, Focus, and Coral. Coral is a Mozilla project that builds open-source tools to make digital journalism more inclusive and more engaging.

Gadgets.ndtv.com says that lefty financial disrupter George Soros is behind it:

> The “Mozilla Information Trust Initiative” comes as an automated real-time fact-checking engine developed by the Full Fact foundation was demonstrated in London.
>
> The group, which is backed by Omidyar and Hungarian-born investor and philanthropist George Soros, said its software is capable of spotting lies in real time, and was used to fact-check a live debate at the House of Commons.

It remains to be seen what form this will take—maybe it'll just die a painful death—but remembering that Mozilla is the company that fired Brendan Eich, the creator of JavaScript, for donating $1000 to a cause they disliked, we can make a pretty good guess.

You'll do a search and discover that the one site that knows the truth about Raspberry Pi or black holes doesn't even show up in the results. Or the software might decide not to let you see it. Maybe an animated paper clip will show up, or maybe a scary red warning screen with a button will say “Get me out of here!” as it currently does when it encounters an encryption certificate that's not signed properly.

Example: Fake mayonnaise

Last week one magazine claimed that millennials hate mayonnaise. Many millennials went online to deny it. Is this fake news? Who is to decide?


And don't get me started about the misinformation in some of those science documentaries on TV. . . .

Or maybe, as is typical of Firefox, it will just hang and spin the little icon around endlessly in circles . . .

This goes beyond politics. It is fanaticism. It is also bad computer science. Just the suggestion that a browser could someday classify and block access to content based on the political views of the programmer would be reason enough to dump it.

What's next? Cell phones that automatically drop calls when the person you're talking to claims that Marconi invented radio instead of Tesla? Self-driving cars that refuse to go to Chick-Fil-A restaurants? Super-chromatic peril-sensitive sunglasses?

A good way to think about these things is the substitution trick: how would you feel if the government was doing this?

This is immensely dangerous. It is theoretically impossible for any computer algorithm to determine, in general, whether a statement is true or false. That means that some person will have to decide for you. The nature of truth is that it's entirely possible for one source to be correct and all the others wrong. In fact, this happens all the time in science; it's how science progresses. If this fad catches on, progress toward understanding the world would grind to a halt.

It's also a great way to ensure that conspiracy theories go mainstream. The Soviet Union showed us that when news is curated and filtered, people automatically assume that everything that is published is a lie. They will assume that what they're not allowed to see is the real truth.

If nothing else, it highlights the real risk of AI. Robots won't be running around with ray-guns trying to kill you. They'll just lie a lot.

aug 19 2018, 6:42 am. Note box added aug 19, 9:30 am. Last edited aug 20 2018, 6:56 am